Can AI Explain Company Performance?

A Horserace of AI Models

June 2023. Authors: Wachi Bandara, PhD, Anshuma Chandak, Brandon Flannery.

SUMMARY

- The rapid evolution of language models has the potential to revolutionize financial analysis

- GPT-3.5 embeddings performed best at identifying companies from their earnings calls, followed by Word2Vec and BERT

- However, models should be selected carefully, as each has its own strengths and weaknesses

ABSTRACT

This paper evaluates the quality of the word vectors produced by different word embedding models on two financial analysis tasks that rely on text similarity: company identification and the explanation of earnings surprises, measured by standardized unexpected earnings (SUE). In the company identification task, we test the models’ ability to identify a company by comparing its earnings call and different sections of its 10-K report with the business section of the 10-K reports of randomly chosen companies. In the SUE task, we test the models’ ability to explain a firm’s standardized unexpected earnings using the text of the presentation section of its earnings call.

Specifically, this study surveys the quality of popular word embedding models on text from earnings calls and 10-K filings. The results suggest that transformer-based models such as GPT and BERT outperform the others in both text-similarity tasks: explaining earnings surprises and identifying companies from earnings calls.

1. INTRODUCTION

There has been a great deal of excitement around Generative Pre-trained Transformer (GPT) models, with ChatGPT taking center stage. Since its introduction in November 2022 [1], this AI chatbot has gained remarkable popularity across many platforms and domains. OpenAI’s technical report [2] states that GPT-4 exhibits human-level performance on various professional and academic benchmarks; notably, it passes a simulated version of the Uniform Bar Examination with a score in the top 10% of test takers. This suggests that sophisticated large language models (LLMs) could serve as useful virtual research assistants for financial tasks. The question we address in this paper is how these models perform when the task moves beyond simple recall.

Early studies using term frequency vectors established strong relationships between word occurrences and future firm returns. Research has also shown that textual similarity measures can identify changes in business operations that precede lower firm returns, lower profitability, and bankruptcies [3]. Joshua Lee’s “Can Investors Detect Managers’ Lack of Spontaneity? Adherence to Predetermined Scripts during Earnings Conference Calls” [4] used natural language processing to analyze the tone and content of earnings conference calls and found evidence that adherence to scripts is associated with negative future firm performance.

Many argue that transformer-based language models such as BERT and GPT are superior to traditional term frequency (TF) models because they capture the contextual relationships between words and their meanings. Attention mechanisms let a model focus on the relevant words and phrases in a sentence and weight them by their importance for predicting the output. This is particularly useful in finance, where the meaning of a word can depend heavily on context (for example, “charge” in “a one-time restructuring charge” versus “we charge a subscription fee”).
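To make the attention idea concrete, the following is a minimal NumPy sketch of scaled dot-product attention, the core operation inside transformer models such as BERT and GPT. It is illustrative only: the toy query, key, and value matrices are random stand-ins for learned projections, and real models add multiple heads, masking, and trained weight matrices.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row maximum for numerical stability before exponentiating.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q: np.ndarray, k: np.ndarray, v: np.ndarray):
    """Return (context vectors, attention weights) for a single attention head.

    q, k: (seq_len, d_k) query and key vectors; v: (seq_len, d_v) value vectors.
    """
    d_k = q.shape[-1]
    # Similarity of every token's query with every token's key, scaled by sqrt(d_k).
    scores = q @ k.T / np.sqrt(d_k)
    # Each row becomes a probability distribution: how strongly a token attends to each token.
    weights = softmax(scores, axis=-1)
    # Each output vector is the attention-weighted average of the value vectors.
    return weights @ v, weights

# Toy example: 4 tokens with 8-dimensional query/key/value vectors (random stand-ins).
rng = np.random.default_rng(0)
q, k, v = (rng.normal(size=(4, 8)) for _ in range(3))
context, weights = scaled_dot_product_attention(q, k, v)
print(weights.round(3))  # row i: attention paid by token i to tokens 0..3
```

Because the weights depend on the whole sentence, the same word receives a different context vector in different sentences, which is the property a term frequency vector lacks.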

Several recent studies have demonstrated the effectiveness of transformer-based models in financial applications. For instance, Huang et al. [5] show that BERT and FinBERT outperform more basic language models on text summarization and classification tasks, and Lopez-Lira and Tang [6] find that ChatGPT outperforms traditional sentiment analysis methods in predicting stock market returns. Together, these studies suggest that transformer-based models are better at capturing complex relationships and at explaining and predicting financial outcomes.

2. METHODOLOGY

In this study, we aim to explore the performance of several word embedding models in two different tasks: company identification and explaining earnings surprises. We utilize six different language models for creating word embeddings: OpenAI’s GPT-3.5, BERT, FinBERT, LSI, Word2Vec, and Doc2Vec.

2.1 DATASETS

We use two primary public datasets: Form 10-K annual filings and earnings call transcripts. The sample period runs from January 2019 to February 2023.

The Form 10-K is an annual filing that comprehensively describes a company’s performance over a fiscal year. US public companies are required by the Securities and Exchange Commission (SEC) to file a 10-K for each fiscal year. The sections of a 10-K vary slightly from company to company, but a 10-K generally covers the business, risk factors, management’s discussion and analysis (MD&A), financial statements, executive compensation, corporate governance, and legal proceedings, among others.

An earnings call is a quarterly conference call in which a publicly traded company’s management discusses the firm’s financial performance for the quarter with analysts, investors, and other stakeholders. The call typically has several sections: an executive presentation, followed by a presentation of the financial results, and finally a question-and-answer session in which analysts and investors can ask about the results and the company’s operations. In this study, we use the business presentation section because it is the most scripted portion of the call.

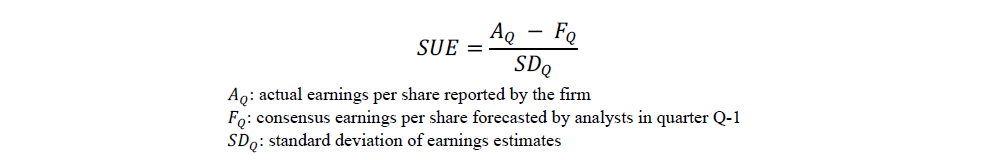

Standardized Unexpected Earnings (SUE) is a widely used measure of the magnitude of earnings surprises. It is calculated as the difference between actual earnings per share and consensus earnings per share, divided by the standard deviation of the consensus estimate.
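The SUE definition above can be written as SUE = (EPS_actual - EPS_consensus) / σ_consensus, where σ_consensus is the standard deviation of the analysts’ estimates. Below is a minimal sketch of that formula; the function name and the sample figures are hypothetical, and the paper does not specify which consensus snapshot or estimate set it used.

```python
def standardized_unexpected_earnings(actual_eps: float,
                                     consensus_mean_eps: float,
                                     consensus_std_eps: float) -> float:
    """SUE = (actual EPS - consensus EPS) / standard deviation of analyst estimates."""
    if consensus_std_eps <= 0:
        # With a single estimate, or identical estimates, dispersion is zero and SUE is undefined.
        raise ValueError("consensus standard deviation must be positive")
    return (actual_eps - consensus_mean_eps) / consensus_std_eps

# Hypothetical quarter: actual EPS $1.10, consensus $1.00, estimate dispersion $0.05.
print(round(standardized_unexpected_earnings(1.10, 1.00, 0.05), 4))  # 2.0
```

In this example the company beat consensus by two standard deviations of the analysts’ estimates; a miss of the same size would give -2.0.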

2.2 DATA PREPROCESSING

We applied basic preprocessing: lower-casing, stop-word removal, and removal of special characters, punctuation, and numbers. English stop words were taken from the NLTK library [7].
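A minimal sketch of these preprocessing steps follows. The exact regular expression and tokenizer used in the study are not stated, so the ones below are assumptions, and the small stop-word set is illustrative only; with NLTK installed, the full English list can be loaded via `nltk.download("stopwords")` and `set(nltk.corpus.stopwords.words("english"))`.

```python
import re

def preprocess(text: str, stop_words: set[str]) -> list[str]:
    """Lower-case, strip non-letters (punctuation, digits, symbols), tokenize, drop stop words."""
    lowered = text.lower()
    # Replace anything that is not a lowercase ASCII letter or whitespace with a space.
    letters_only = re.sub(r"[^a-z\s]", " ", lowered)
    # Whitespace tokenization, then stop-word filtering.
    return [tok for tok in letters_only.split() if tok not in stop_words]

# Illustrative stop-word subset (assumption); the study used NLTK's English list.
example_stop_words = {"and", "in", "the", "our"}
print(preprocess("Revenue grew 12% in Q3, and our margins improved!", example_stop_words))
# ['revenue', 'grew', 'q', 'margins', 'improved']
```

Note that this pattern also discards accented letters; a production pipeline might keep them or transliterate them first.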

2.3 WORD EMBEDDINGS

Word embeddings are vector representations of words, typically learned from a large corpus; the models below produce such vectors for words, sentences, or whole documents. The models used in this analysis are as follows:

1. Word2Vec: A neural network-based language model used to represent words and phrases as numerical vectors [8].

2. Doc2Vec: A neural network-based language model used to represent documents as numerical vectors [9].

3. LSI: Latent semantic indexing (LSI) is an information retrieval technique based on the spectral analysis of the term-document matrix [10].

4. BERT: A language model based on the transformer architecture that uses masked language modeling to pre-train a deep neural network on large amounts of text. The text was tokenized using a pre-trained Hugging Face [11] Sentence-BERT tokenizer. The pre-trained model weights were frozen and not modified during fine-tuning or training.

5. FinBERT: A language model specifically designed for financial sentiment analysis and financial text classification tasks. It is based on the Bidirectional Encoder Representations from Transformers (BERT) architecture [5].

6. GPT-3.5: We used OpenAI’s GPT-3.5-class embedding model, text-embedding-ada-002 [12]. At the time of writing, this was OpenAI’s latest embedding model; it is 10 times cheaper than earlier embedding models, more performant, and able to embed approximately 10 pages of text into a single vector.
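To illustrate what “spectral analysis of the term-document matrix” means for LSI (item 3 above), here is a minimal NumPy sketch using a truncated singular value decomposition. The paper does not say which LSI implementation or how many dimensions it used (libraries such as gensim provide `gensim.models.LsiModel` for this at scale); the toy counts and `k=2` are assumptions for illustration.

```python
import numpy as np

def lsi_document_vectors(term_doc: np.ndarray, k: int) -> np.ndarray:
    """Project documents into a k-dimensional latent semantic space.

    term_doc: (n_terms, n_docs) matrix of term counts or TF-IDF weights.
    Returns a (n_docs, k) matrix with one row per document.
    """
    # Thin SVD: term_doc = U @ diag(s) @ Vt.
    u, s, vt = np.linalg.svd(term_doc, full_matrices=False)
    # Keep the k largest singular values; document coordinates are the rows of (diag(s_k) @ Vt_k).T.
    return (np.diag(s[:k]) @ vt[:k, :]).T

# Toy term-document counts: rows are terms, columns are 4 short documents.
counts = np.array([
    [3, 2, 0, 0],   # "revenue"
    [2, 3, 0, 1],   # "margin"
    [0, 0, 4, 2],   # "loan"
    [0, 1, 2, 3],   # "deposit"
], dtype=float)
doc_vecs = lsi_document_vectors(counts, k=2)
print(doc_vecs.shape)  # (4, 2)
```

Documents 0 and 1 (revenue and margin language) end up close together in the two-dimensional space, while documents 2 and 3 (loan and deposit language) form a separate group; in practice the matrix would usually be TF-IDF weighted before the SVD.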

2.4 IMPLEMENTATION DETAILS

2.4.1 COMPANY IDENTIFICATION

In the first task, company identification, we expect an earnings call to be most similar to the 10-K of the company it belongs to. We identify that company by computing the cosine similarity between the embedding of the earnings call’s business presentation section and the embedding of each candidate 10-K document. This rests on the assumption that an earnings call and a 10-K from the same company use similar language.

The company whose 10-K has the highest cosine similarity with the earnings call is therefore the most likely owner of that call. Other factors, such as differences in language use or presentation style, may also affect the similarity score, but ranking candidates by cosine similarity is a simple and effective way to identify the company behind an earnings call.
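The matching rule described above can be sketched in a few lines of NumPy. The embeddings and tickers below are hypothetical three-dimensional stand-ins; real embeddings would come from one of the models in Section 2.3 and have hundreds or thousands of dimensions.

```python
import numpy as np

def cosine_similarity_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of a (n, d) and the rows of b (m, d)."""
    a_norm = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_norm = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a_norm @ b_norm.T

def identify_companies(call_vecs: np.ndarray, tenk_vecs: np.ndarray,
                       tenk_tickers: list[str]) -> list[str]:
    """For each earnings-call embedding, return the ticker whose 10-K embedding is most similar."""
    sims = cosine_similarity_matrix(call_vecs, tenk_vecs)
    best = sims.argmax(axis=1)
    return [tenk_tickers[i] for i in best]

# Hypothetical embeddings for three companies' 10-Ks and two earnings calls.
tenk_tickers = ["AAA", "BBB", "CCC"]
tenk_vecs = np.array([[0.9, 0.1, 0.0],
                      [0.1, 0.8, 0.3],
                      [0.0, 0.2, 0.9]])
call_vecs = np.array([[0.2, 0.7, 0.4],   # reads most like BBB's 10-K
                      [0.8, 0.2, 0.1]])  # reads most like AAA's 10-K
print(identify_companies(call_vecs, tenk_vecs, tenk_tickers))  # ['BBB', 'AAA']
```

Normalizing the rows first turns the whole similarity computation into a single matrix product, which scales well to many calls and candidate filings.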

2.4.2 EXPLAINING EARNINGS SURPRISE

In the second task, we aim to explain earnings surprises, measured by SUE, using the presentation sections of earnings calls.

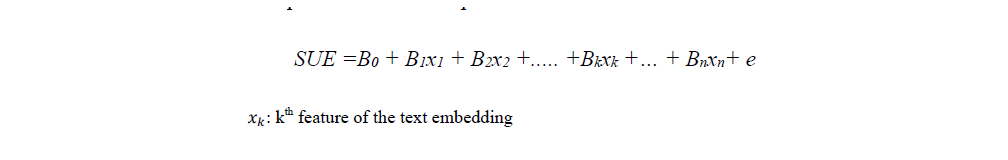

On average, SUE scores are positive, indicating that analysts tend to underestimate earnings and revenue data points. In this experiment, we train linear models to relate embedding features to contemporaneous SUE scores. Each month, we build a training set from the previous two years of data and fit a linear regression with the SUE score as the dependent variable and the embedding components as the independent variables.

We then apply this fit to the current month’s earnings reports to produce expected earnings surprise scores. We expect that, for sufficiently sophisticated language models, expected and actual SUE scores will show a strong positive relationship.
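The monthly rolling procedure described above can be sketched as follows, assuming a 24-month trailing window that excludes the month being predicted and ordinary least squares with an intercept. The function name and toy data are hypothetical, and the paper does not state whether the embedding dimensions were reduced or the regression regularized; with real embeddings (text-embedding-ada-002 vectors have 1,536 dimensions), `np.linalg.lstsq` returns the minimum-norm solution whenever the system is underdetermined.

```python
import numpy as np

def rolling_expected_sue(months: np.ndarray, features: np.ndarray,
                         sue: np.ndarray, window: int = 24) -> np.ndarray:
    """Out-of-sample expected SUE for every earnings call.

    months:   (n,) integer month index of each call (0, 1, 2, ...).
    features: (n, d) embedding components of each call.
    sue:      (n,) realized SUE scores.
    For each month t, OLS with an intercept is fitted on calls from months
    t - window .. t - 1 and applied to the calls in month t. Months without a
    full window of history stay NaN.
    """
    expected = np.full(sue.shape, np.nan)
    x = np.column_stack([np.ones(len(sue)), features])  # intercept + embedding components
    first_month = months.min()
    for t in np.unique(months):
        if t - window < first_month:
            continue  # fewer than `window` months of history available
        train = (months >= t - window) & (months < t)
        test = months == t
        # Fit on the trailing window only, so month t never appears in its own training set.
        beta, *_ = np.linalg.lstsq(x[train], sue[train], rcond=None)
        expected[test] = x[test] @ beta
    return expected

# Toy panel: 30 months, 10 calls per month, 2 "embedding" components, noise-free target.
rng = np.random.default_rng(42)
months = np.repeat(np.arange(30), 10)
features = rng.normal(size=(months.size, 2))
sue = 0.5 + 2.0 * features[:, 0] - 1.0 * features[:, 1]
expected = rolling_expected_sue(months, features, sue)
print(np.nanmax(np.abs(expected - sue)))  # close to zero for this noise-free toy data
```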

3. RESULTS

In this section, we present the results for the two tasks described above.

3.1 COMPANY IDENTIFICATION

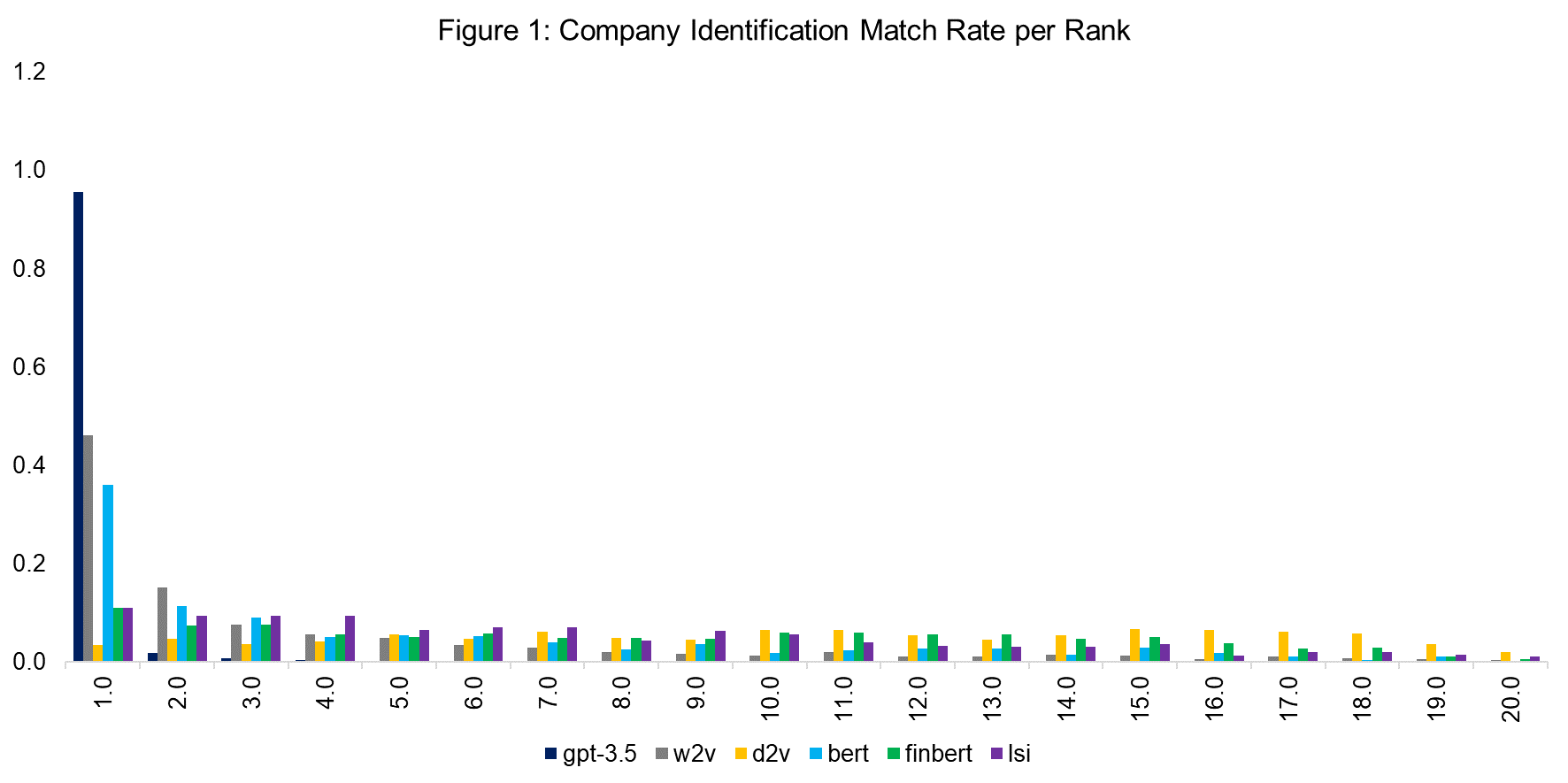

The analysis of company identification using different word embedding algorithms has revealed some interesting findings.

The study measures the match rate for each rank bucket. The top similarity bucket has the highest match rate, and the match rate decreases monotonically from bucket to bucket, indicating that both high and low similarity scores carry information about which company a call belongs to. GPT-3.5 embeddings outperform all other algorithms: with GPT-3.5 embeddings, the company ranked number 1 is the actual company approximately 95% of the time.
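One way to compute a match rate per rank is sketched below. It assumes each bucket is a single rank and that every call is scored against the same set of candidate companies; the paper’s exact bucket definition and candidate set are not specified, and the toy similarity values are hypothetical.

```python
import numpy as np

def match_rate_by_rank(sims: np.ndarray, true_idx: np.ndarray) -> np.ndarray:
    """Share of earnings calls whose actual company lands at each similarity rank.

    sims:     (n_calls, n_companies) cosine similarities between calls and candidate 10-Ks.
    true_idx: (n_calls,) column index of the company each call actually belongs to.
    Element r of the result is the fraction of calls where the actual company is
    ranked r + 1 (rank 1 = most similar).
    """
    n_calls, n_companies = sims.shape
    # Sort candidate companies from most to least similar for each call.
    order = np.argsort(-sims, axis=1)
    # 0-based position of the actual company in each call's ranking.
    ranks = np.argmax(order == true_idx[:, None], axis=1)
    return np.bincount(ranks, minlength=n_companies) / n_calls

# Toy similarities for 4 calls against 3 candidate companies.
sims = np.array([[0.9, 0.2, 0.1],
                 [0.3, 0.8, 0.4],
                 [0.5, 0.6, 0.4],
                 [0.7, 0.1, 0.2]])
true_idx = np.array([0, 1, 2, 0])
print(match_rate_by_rank(sims, true_idx))  # match rates for ranks 1, 2, 3: 0.75, 0.0, 0.25
```

Under this definition, the approximately 95% figure for GPT-3.5 corresponds to the first element of the returned array.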

Interestingly, Word2Vec consistently outperformed BERT at every rank, while FinBERT, despite being trained on financial text, performed poorly.

3.2 EARNINGS SURPRISE EXPLANATION

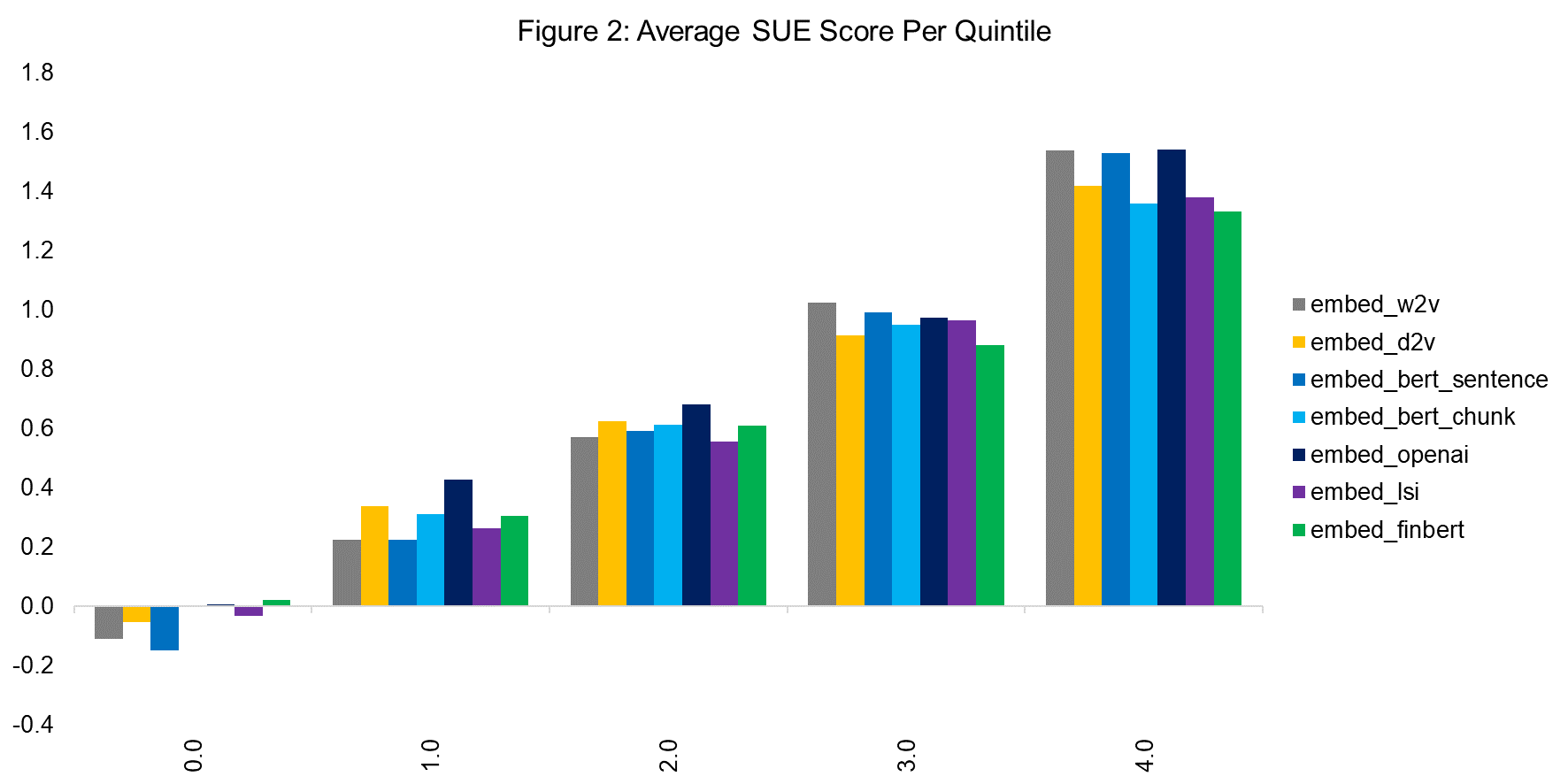

As discussed, we train linear models each month to produce expected SUE scores from earnings call embeddings, and we expect a strong relationship between expected and actual SUE scores. To visualize the strength of this relationship across language models, we sort the expected SUE scores into quintiles each month.

Figure 2 shows the average actual SUE score for each expected SUE quintile. If the embeddings help explain earnings surprises, this chart should increase monotonically. The chart shows that all the embeddings tested are useful for explaining earnings surprises. Looking only at the spread between the average actual SUE scores of the first and last quintiles, BERT embeddings appear the most useful.
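The quintile chart can be reproduced with a sketch like the one below: quintiles are formed within each month, and the realized SUE is then averaged per quintile across all months. Pooling across months (rather than averaging monthly averages) is an assumption, as are the synthetic inputs; pandas users might instead apply `pd.qcut` inside a `groupby` on the month.

```python
import numpy as np

def quintile_means(months: np.ndarray, expected_sue: np.ndarray,
                   actual_sue: np.ndarray, n_bins: int = 5) -> np.ndarray:
    """Average actual SUE per expected-SUE quintile, with quintiles formed within each month.

    Element 0 is the lowest-expected-SUE quintile. Assumes every month has at
    least n_bins observations.
    """
    bins = np.empty(len(months), dtype=int)
    for m in np.unique(months):
        idx = np.flatnonzero(months == m)
        # Rank calls within the month (0 = lowest expected SUE)...
        ranks = np.argsort(np.argsort(expected_sue[idx]))
        # ...and split the ranks into n_bins equal-sized groups.
        bins[idx] = ranks * n_bins // len(idx)
    # Pool all months and average the realized SUE in each bin.
    return np.array([actual_sue[bins == b].mean() for b in range(n_bins)])

# Synthetic data: 12 months, 50 calls each; actual SUE = expected SUE + noise.
rng = np.random.default_rng(1)
months = np.repeat(np.arange(12), 50)
expected_sue = rng.normal(size=months.size)
actual_sue = expected_sue + rng.normal(scale=0.5, size=months.size)
print(quintile_means(months, expected_sue, actual_sue).round(2))
```

For a useful embedding model, the printed averages should rise from the first to the fifth quintile, which is the monotonic pattern Figure 2 looks for.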

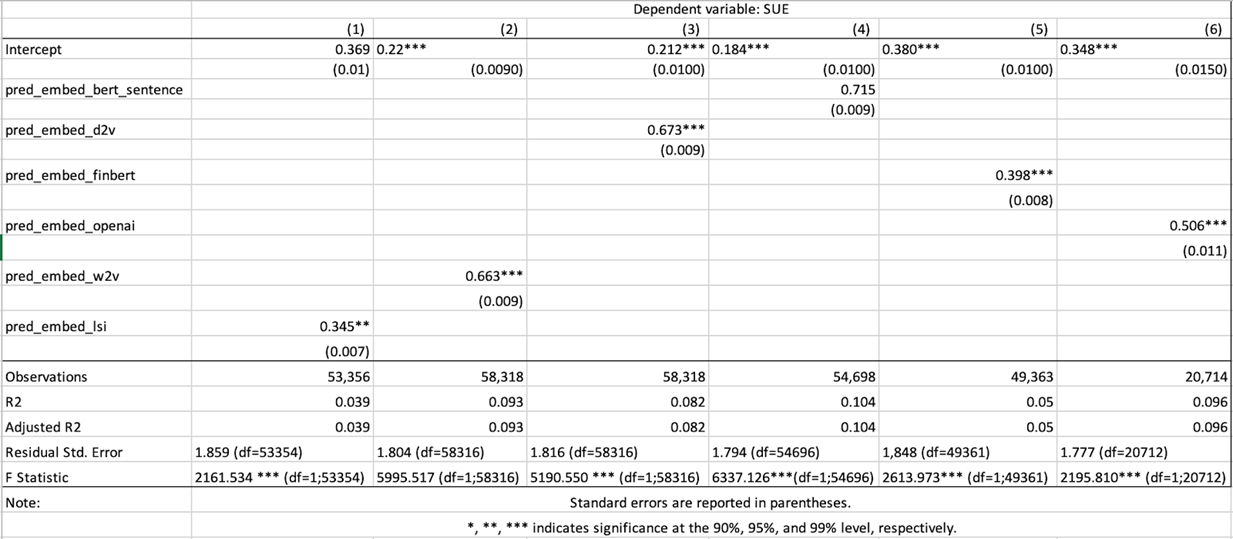

We further evaluate the explanatory power of the different embeddings by examining regression fits. Table 1 compares the results of regressing actual SUE scores on expected SUE scores for each language model.

Table 1: Regression Results for SUE and Different Embedding Algorithms

Judging by R-squared alone, BERT average sentence embeddings are the most effective at explaining earnings surprises. The size of the coefficient on expected SUE in the BERT fit, relative to the fits for the other language models, supports this. All fits show a statistically significant positive relationship between expected and actual surprise scores.
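The quantities discussed for Table 1 (the coefficient on expected SUE, its significance, and R-squared) can be computed as in the sketch below. It uses classical homoskedastic standard errors on synthetic data; the paper does not say which standard errors it used, and a panel of earnings calls might call for clustered or robust errors instead.

```python
import numpy as np

def regress_actual_on_expected(expected_sue: np.ndarray, actual_sue: np.ndarray) -> dict:
    """OLS of actual SUE on expected SUE with an intercept.

    Returns the intercept, slope, classical t-statistic of the slope, and R-squared.
    """
    n = expected_sue.size
    x = np.column_stack([np.ones(n), expected_sue])
    (intercept, slope), *_ = np.linalg.lstsq(x, actual_sue, rcond=None)
    residuals = actual_sue - (intercept + slope * expected_sue)
    ss_res = residuals @ residuals
    ss_tot = np.sum((actual_sue - actual_sue.mean()) ** 2)
    # Homoskedastic standard error of the slope: sqrt(s^2 / sum((x - mean(x))^2)).
    s2 = ss_res / (n - 2)
    se_slope = np.sqrt(s2 / np.sum((expected_sue - expected_sue.mean()) ** 2))
    return {
        "intercept": float(intercept),
        "slope": float(slope),
        "t_slope": float(slope / se_slope),
        "r_squared": float(1.0 - ss_res / ss_tot),
    }

# Synthetic example: a true slope of 0.8 plus unit-variance noise.
rng = np.random.default_rng(7)
expected_sue = rng.normal(size=500)
actual_sue = 0.1 + 0.8 * expected_sue + rng.normal(size=500)
print(regress_actual_on_expected(expected_sue, actual_sue))
```

A higher R-squared and a larger, significant slope both indicate that a model’s expected SUE tracks realized surprises more closely, which is how the language models are compared above.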

This suggests that, whichever language model is used, earnings calls contain clear linguistic cues about the magnitude and direction of the earnings surprise. It is consistent with executives speaking differently depending on firm performance relative to expectations. Our study suggests that BERT is the most effective of the models tested at representing these linguistic cues in earnings calls.

Comparing the language models overall, earnings call embeddings created with BERT, Word2Vec, and OpenAI’s GPT-3.5 all perform well in both company identification and earnings surprise explanation. GPT-3.5 embeddings are the strongest performer in company identification, while BERT embeddings perform best at explaining earnings surprises from earnings call transcripts. Note that the GPT-3.5 experiments used significantly fewer observations than those for the other language models because of the cost of creating the embeddings.

4. CONCLUSION

In this work, we surveyed different word embedding algorithms based on their performance on two financial tasks that are centered around capturing semantic relationships in financial text.

In our first task, embeddings generated by GPT-3.5 outperformed the other algorithms, although simpler algorithms such as Word2Vec also performed strongly. In our second task, linear models using document embeddings from a range of language models effectively explained standardized unexpected earnings, indicating that language models can capture the explanations executives give for firm performance. In this task, BERT embeddings explained earnings surprises best, with Word2Vec and GPT-3.5 also performing well.

Based on our survey, sophisticated models such as GPT and BERT may not be necessary for certain tasks, as Word2Vec demonstrated comparable performance. Another interesting observation is that FinBERT, a model trained on financial text, fell short of expectations, which is understandable given that it has performed well mainly on sentiment analysis tasks [13].

It is therefore crucial to consider the specific requirements of a task before selecting an algorithm, as different algorithms excel in different scenarios and differ in cost. Using text to find relationships between different financial documents and company returns is an interesting direction for future work.

5. REFERENCES

[1] Radford, Alec, et al. “Improving Language Understanding by Generative Pre-Training.” OpenAI, 11 June 2018, cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf.

[2] OpenAI. “GPT-4 Technical Report.” arXiv.Org, 27 Mar. 2023, arxiv.org/abs/2303.08774.

[3] Cohen, Lauren, et al. “Lazy Prices.” SSRN, 14 Aug. 2010, ssrn.com/abstract=1658471.

[4] Lee, Joshua. “Can Investors Detect Managers’ Lack of Spontaneity? Adherence to Predetermined Scripts during Earnings Conference Calls.” The Accounting Review, American Accounting Association, 1 Jan. 2016, publications.aaahq.org/accounting-review/article-abstract/91/1/229/3799/Can-Investors-Detect-Managers-Lack-of-Spontaneity.

[5] Huang, Allen, et al. “FinBERT: A Large Language Model for Extracting Information from Financial Text.” SSRN, 27 Aug. 2021, ssrn.com/abstract=3910214.

[6] Lopez-Lira, Alejandro, and Yuehua Tang. “Can ChatGPT Forecast Stock Price Movements? Return Predictability and Large Language Models.” arXiv.Org, 22 Apr. 2023, arxiv.org/abs/2304.07619.

[7] Bird, Steven, et al. “Natural Language Processing with Python.” O’Reilly Online Learning, June 2009, www.oreilly.com/library/view/natural-language-processing/9780596803346/.

[8] Mikolov, Tomas, et al. “Efficient Estimation of Word Representations in Vector Space.” arXiv.Org, 7 Sept. 2013, arxiv.org/abs/1301.3781.

[9] Le, Quoc, and Tomas Mikolov. “Distributed Representations of Sentences and Documents.” arXiv.Org, 22 May 2014, arxiv.org/pdf/1405.4053.pdf.

[10] Papadimitriou, Christos H., et al. “Latent Semantic Indexing: A Probabilistic Analysis.” Journal of Computer and System Sciences, 25 May 2002, www.sciencedirect.com/science/article/pii/S0022000000917112.

[11] “Sentence Transformers.” Hugging Face, huggingface.co/sentence-transformers.

[12] Greene, Ryan, et al. “New and Improved Embedding Model.” OpenAI, 15 Dec. 2022, openai.com/blog/new-and-improved-embedding-model.

[13] “ProsusAI/finbert.” Hugging Face, huggingface.co/ProsusAI/finbert.

ABOUT THE AUTHORS

Wachi Bandara is the CIO of Asset Management for Exos Financial. Prior to joining Exos, Wachi was the CIO and Head of Research at Pluribus Labs where he led the investment team and executed on the Pluribus Labs vision to become the global leader in the use of qualitative data in a systematic investment process. Prior to that, he co-founded a data science and machine-learning start-up focused on investment management, which was eventually acquired by Golden Gate Capital. Wachi started his career as a machine-learning researcher focused on computer vision. Wachi holds a PhD and an MS in Finance from The George Washington University, an MS in Applied Mathematics from the Florida Institute of Technology, and a BS in Mathematics from the University of Colombo, Sri Lanka. He also holds the Financial Risk Manager certification from the Global Association of Risk Professionals.

Anshuma Chandak is a Data Scientist at Exos Financial. She uses NLP to analyze vast amounts of text for identifying emerging risks and opportunities for portfolio management. She has previously worked as an AI Research Scientist at Accenture Labs in San Jose, California, where she built AI/ML systems in varied enterprise areas. While in this role, she also led the Responsible AI program in North America contributing to Accenture’s thought leadership in ethical use of AI. She has authored 5 patents in the area of ML and NLP, and serves as a technical reviewer and program committee member at some of the top AI conferences. Prior to Accenture, she worked at Expedia, IBM, Reserve Bank of India, and Goldman Sachs. She holds a Master’s in Statistics from Columbia University.

Brandon Flannery is a Quantitative Researcher specializing in NLP with a focus on machine learning models at Exos Financial. Prior to that, Brandon was at Pluribus Labs LLC where he worked on developing ML models for alpha insights and modernizing financial products. His prior roles included building data onboarding pipelines, creating data acquisition tools, and developing ML/NLP models for predicting outcomes. He also contributed to alpha evaluation tools. Brandon’s expertise and passion drive innovation in quantitative research, contributing to intelligent financial products and insights. He has a BA in Statistics from UC Berkeley.
